
To stay ahead of today’s threats, you must do more than keep pace: you need to equip your team with tools that enable smarter, faster responses. For SOC analysts, runbooks in case management systems are essential guides for handling security alerts step by step. The prospect of automating these runbooks with AI is enticing, promising to streamline daily operations and free up time for more critical tasks.

However, some are rightfully skeptical. They worry that AI automation could introduce unexpected issues without careful planning and collaboration, potentially hindering productivity and increasing risk. This blog explores how collaborating with AI during planning and setting AI guardrails can enhance predictability, transparency, and trust in AI automation.

The Importance of Runbooks in Security Operations

Runbooks are structured, step-by-step guides enabling SOC analysts to respond to security incidents consistently and accurately. They are particularly crucial for Tier 1 analysts, who often serve as the first line of defense against a high volume of alerts.

These runbooks provide clear instructions for the following:

- Triaging alerts

- Investigating potential threats

- Determining when to escalate issues

By standardizing responses, runbooks reduce human error and ensure efficient handling of all incidents, even in high-pressure situations. Automating runbooks with AI presents an appealing option for scaling operations, accelerating repetitive tasks, and allowing analysts to focus on more complex, high-stakes cases.

The Need for AI Guardrails in Runbook Automation

While automating runbooks with AI is a game-changer, granting AI too much freedom can quickly backfire. Most runbooks are designed with human readers in mind, presenting step-by-step guides that make sense to analysts but can be confusing for AI.

When left to interpret these text-based instructions independently, AI might:

- Misinterpret steps

- Make unexpected decisions

- Produce unintended results

AI can become unpredictable without a structured plan and human alignment, risking accuracy and eroding your team’s trust in automation. A collaborative planning phase that establishes AI guardrails is crucial because it shows SOC analysts how the AI “interprets” the runbook and how it plans to automate it. This transparency allows analysts to refine the AI’s approach, ensuring the plan aligns with real-world needs before execution begins.

Collaborative Planning: Aligning AI and Analysts

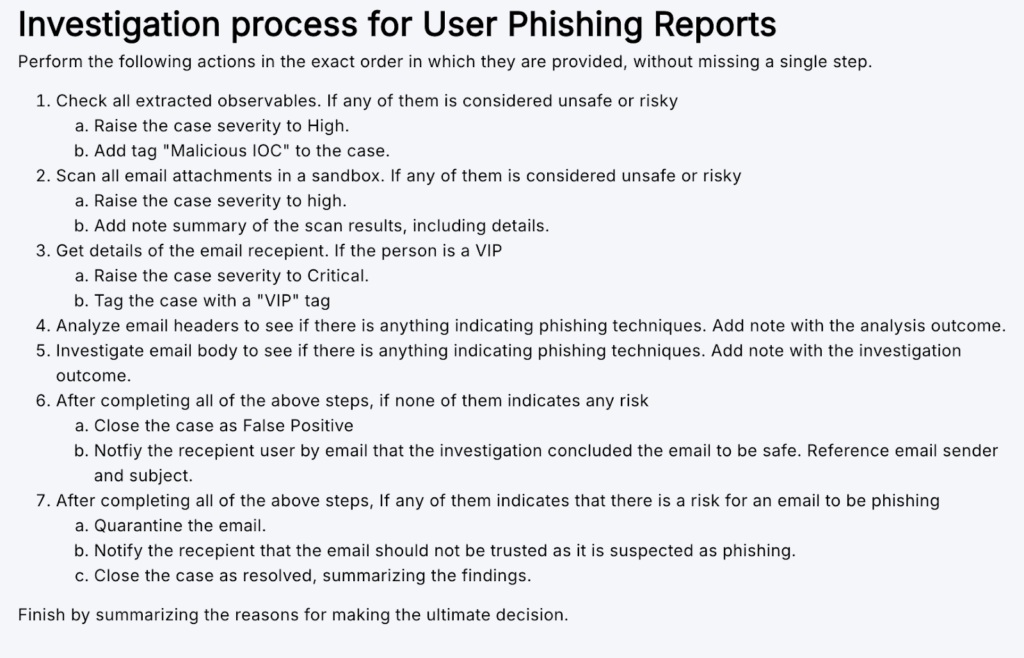

To understand the value of Torq’s approach to runbook automation, let’s consider a common SOC runbook for investigating phishing reports. Such runbooks guide analysts through tasks like checking attachments, analyzing email headers, and escalating incidents when certain conditions are met.

Automating these tasks with AI is more complex than simply running through the steps. Many runbooks are written for human understanding and involve actions that may be ambiguous or beyond direct AI capabilities. Torq’s plan-and-execute approach addresses this challenge by separating the process into distinct planning and execution phases, giving analysts more control and visibility over the AI’s actions.

1. Planning Phase

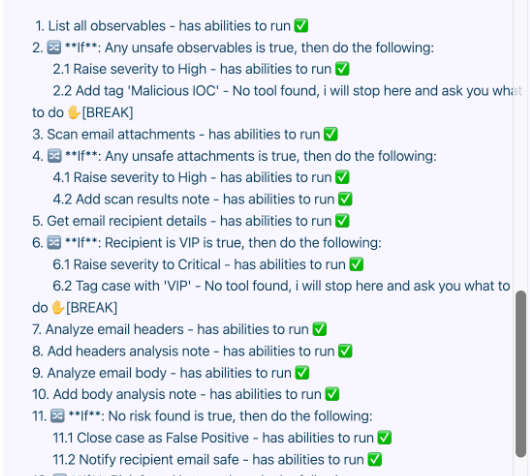

In this phase, the AI:

- Reads through the runbook

- Converts instructions into a structured, transparent plan

- Breaks down each instruction into clear, atomic steps

- Identifies steps it can automate and those requiring human intervention

- Highlights gaps where it lacks necessary tools or access

This transparency allows SOC analysts to modify the plan, choosing where the AI should pause for guidance or where additional human-defined workflows are needed. In scenarios where full automation isn’t feasible, such as in highly secure or restricted environments, this collaborative planning ensures that the AI aligns closely with human intent and avoids unnecessary errors.
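The planning steps above can be illustrated with a small toy model. This is not Torq’s planner: it assumes one runbook instruction per line, uses keyword matching where a real system would use a language model, and the action names (`scan_attachment`, `analyze_headers`, `add_tag`, `escalate_case`) are hypothetical. It shows the three outcomes the planning phase surfaces (automatable steps, steps needing an analyst, and gaps where a required tool is missing) plus an analyst edit that adds a pause point.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class StepKind(Enum):
    AUTOMATED = "automated"  # mapped to a tool the AI has access to
    HUMAN = "human"          # needs an analyst's judgment or has no known action
    GAP = "gap"              # mapped to an action, but the tool is unavailable


@dataclass
class PlanStep:
    text: str
    action: str | None          # hypothetical action name, if one matched
    kind: StepKind
    pause_before: bool = False  # analysts can force a checkpoint here


# Hypothetical keyword-to-action table; a real planner would use an LLM.
ACTION_KEYWORDS = {
    "attachment": "scan_attachment",
    "header": "analyze_headers",
    "tag": "add_tag",
    "escalate": "escalate_case",
}
HUMAN_KEYWORDS = ("decide", "judge", "confirm with")


def build_plan(runbook: str, available_tools: set[str]) -> list[PlanStep]:
    """Turn each non-empty runbook line into one atomic, classified step."""
    plan = []
    for line in runbook.splitlines():
        # Drop list markers such as "1." or "-" before classifying.
        text = line.strip().lstrip("-0123456789. ").strip()
        if not text:
            continue
        lowered = text.lower()
        if any(word in lowered for word in HUMAN_KEYWORDS):
            plan.append(PlanStep(text, None, StepKind.HUMAN))
            continue
        action = next((a for k, a in ACTION_KEYWORDS.items() if k in lowered), None)
        if action is None:
            kind = StepKind.HUMAN      # nothing the AI knows how to do
        elif action in available_tools:
            kind = StepKind.AUTOMATED
        else:
            kind = StepKind.GAP        # surface the missing tool instead of guessing
        plan.append(PlanStep(text, action, kind))
    return plan


runbook = """
1. Scan the attachment in a sandbox
2. Analyze the email headers for spoofing
3. Add the tag 'Malicious IOC' if the verdict is malicious
4. Decide whether to escalate to Tier 2
"""
plan = build_plan(runbook, {"scan_attachment", "analyze_headers", "escalate_case"})
plan[1].pause_before = True  # analyst edit: review header findings before continuing
for step in plan:
    print(f"{step.kind.value:9} {step.text}")
```

The `GAP` result is what supports trust here: rather than improvising a workaround for the missing `add_tag` tool, the plan flags it so the analyst can add a workflow, insert a pause, or handle the step by hand.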

2. Execution Phase

Once the analyst reviews and approves the plan, execution follows this carefully vetted blueprint.

This approach:

- Removes ambiguity and nondeterminism from execution

- Provides transparency and reliability

- Fosters trust in the automation process

Analysts can be confident that AI will follow the exact plan, making the automation more efficient and dependable without sacrificing control or accuracy.
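One way to make “the AI will follow the exact plan” enforceable is to fingerprint the plan when the analyst approves it and refuse to execute anything that no longer matches. The post doesn’t describe how Torq does this; the sketch below is a hypothetical illustration that assumes plans are lists of JSON-serializable steps and uses a SHA-256 digest of a canonical JSON encoding. The function names, step format, and stub tools are all made up for the example.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass
from typing import Any, Callable


def plan_digest(steps: list[dict[str, Any]]) -> str:
    """Stable fingerprint of a plan: any change to any step changes the digest."""
    canonical = json.dumps(steps, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ApprovedPlan:
    steps: list[dict[str, Any]]
    approved_digest: str
    approved_by: str


def approve(steps: list[dict[str, Any]], analyst: str) -> ApprovedPlan:
    """Freeze a reviewed plan: deep-copy it and record its digest."""
    frozen = json.loads(json.dumps(steps))  # later edits to the draft don't leak in
    return ApprovedPlan(frozen, plan_digest(frozen), analyst)


def execute(plan: ApprovedPlan, tools: dict[str, Callable[..., str]]) -> list[str]:
    """Validate the whole plan up front, then run exactly the approved steps in order."""
    if plan_digest(plan.steps) != plan.approved_digest:
        raise RuntimeError("Plan changed after approval; re-review required")
    missing = [s["action"] for s in plan.steps if s["action"] not in tools]
    if missing:
        raise RuntimeError(f"No tool registered for: {missing}")
    log = []
    for step in plan.steps:
        result = tools[step["action"]](**step.get("args", {}))
        log.append(f"{step['action']}: {result}")
    return log


draft = [
    {"action": "scan_attachment", "args": {"name": "invoice.pdf"}},
    {"action": "analyze_headers", "args": {"message_id": "abc123"}},
]
tools = {
    "scan_attachment": lambda name: f"scanned {name}",
    "analyze_headers": lambda message_id: f"headers analyzed for {message_id}",
}

approved = approve(draft, analyst="tier1-analyst")
print(execute(approved, tools))
# ['scan_attachment: scanned invoice.pdf', 'analyze_headers: headers analyzed for abc123']

approved.steps.append({"action": "delete_mailbox"})  # tampering after approval
try:
    execute(approved, tools)
except RuntimeError as err:
    print(err)  # Plan changed after approval; re-review required
```

Hashing a canonical encoding (sorted keys, fixed separators) means that reordering, adding, or editing any step invalidates the approval, so the analyst’s review stays tied to what actually runs.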

To make the concept concrete, consider how Socrates, our AI SOC analyst, would behave without the ability to add tags, focusing on its communication skills and its resistance to AI hallucination.

Even without the capability to add tags, Socrates would still demonstrate its effectiveness in several ways:

Clear communication of limitations: When faced with a task it cannot perform, such as adding a tag, Socrates explicitly states its limitations. For example, it might say, “I’m unable to add the tag ‘Malicious IOC’ as I don’t have that capability. This step requires human intervention.”

Requesting user input: Socrates pauses the process and asks for user input when the necessary tools or permissions are lacking. This demonstrates its ability to recognize boundaries and seek assistance when needed.

Proceeding with available tools: For steps where Socrates has the required capabilities, it continues to execute them efficiently. These actions are marked as completed, or “green,” in the process.

Detailed explanations: Throughout its analysis and decision-making process, Socrates provides clear, thorough explanations of its reasoning, helping analysts understand its thought process even for actions it cannot perform.

Suggesting alternatives: When unable to perform a specific action, Socrates might suggest alternative approaches or provide information that could help the human analyst complete the task manually.

These behaviors highlight Socrates’ ability to communicate effectively, recognize its own limitations, and resist AI hallucination by not claiming capabilities it doesn’t have. They also emphasize AI’s role as a collaborative tool that enhances human decision-making in the SOC rather than attempting to replace human judgment entirely.
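The behaviors listed above (state the limitation, pause for a human, keep going where tools exist, suggest an alternative) can be sketched as a small executor loop. This is not Socrates’ implementation; the step format, the tool names (`analyze_headers`, `add_tag`, `escalate_case`), and the `MANUAL_ALTERNATIVES` table are hypothetical, and “resuming” is simplified to re-running the remaining steps once the analyst has handled the blocked one.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class StepResult:
    action: str
    status: str   # "completed" (shown as green) or "needs_human"
    message: str


# Hypothetical manual fallbacks the agent can suggest when it lacks a tool.
MANUAL_ALTERNATIVES = {
    "add_tag": "Add the tag manually in the case management system.",
}


def run_until_blocked(
    steps: list[tuple[str, dict[str, Any]]],
    tools: dict[str, Callable[..., str]],
) -> tuple[list[StepResult], int | None]:
    """Execute steps in order and pause at the first step with no matching tool.

    Returns the results so far and the index of the blocked step
    (None if every step completed).
    """
    results: list[StepResult] = []
    for i, (action, args) in enumerate(steps):
        tool = tools.get(action)
        if tool is None:
            # State the limitation plainly instead of claiming the step ran.
            msg = (f"I'm unable to run '{action}' as I don't have that capability. "
                   "This step requires human intervention.")
            hint = MANUAL_ALTERNATIVES.get(action)
            if hint:
                msg += f" Suggestion: {hint}"
            results.append(StepResult(action, "needs_human", msg))
            return results, i
        results.append(StepResult(action, "completed", tool(**args)))
    return results, None


steps = [
    ("analyze_headers", {"message_id": "abc123"}),
    ("add_tag", {"tag": "Malicious IOC"}),
    ("escalate_case", {"tier": 2}),
]
tools = {
    "analyze_headers": lambda message_id: f"headers analyzed for {message_id}",
    "escalate_case": lambda tier: f"escalated to Tier {tier}",
}

results, blocked_at = run_until_blocked(steps, tools)
# The analyst handles the blocked step by hand, then the run resumes after it.
if blocked_at is not None:
    more, _ = run_until_blocked(steps[blocked_at + 1:], tools)
    results += more
for r in results:
    print(f"{r.status:11} {r.action}: {r.message}")
```

The key design choice is that a missing tool produces an explicit `needs_human` result and stops the run, rather than a fabricated success, which is the property that guards against hallucinated actions.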

Strengthening Security Through Transparent AI Collaboration

Trust and transparency are fundamental to building an effective security strategy in today’s rapidly evolving threat landscape. Torq’s AI capabilities prioritize collaboration and clarity, transforming how SOC teams handle automation. By structuring automation as a two-phase process (planning, then execution), Torq ensures that AI usage is efficient, bounded by AI guardrails, and aligned with human oversight and intent.

This collaborative approach allows human SOC analysts to:

- Maintain control over automated processes

- Reduce uncertainty in AI actions

- Trust the predictability and reliability of AI-driven tasks

Fostering a security environment where AI and human expertise work together can strengthen an organization’s SOC capabilities and enhance its overall security posture. See Torq’s AI in action: schedule a demo.

Learn more about building trust in AI and how structured, evidence-backed summaries generated by AI enable seamless SOC shift transfers.