Staying ahead of evolving cyber threats means more than just keeping up — it means outsmarting the adversary with intelligent, proactive solutions that supercharge your team. This blog kicks off our latest series focused on building trust in AI in Security Operations Centers (SOCs).

As we navigate this new era of AI, Torq recognizes that integrating intelligent systems into existing security workflows is both novel and essential. It isn’t just about deploying advanced technology; it’s about building solutions that collaborate seamlessly with your team and earn their trust. Our mission is to create AI systems that improve efficiency while fitting naturally into daily operations such as SOC shift handoffs, so that technology and human expertise work hand in hand.

The Challenge of Relying on Naive Summarizations in SOC Shift Handovers

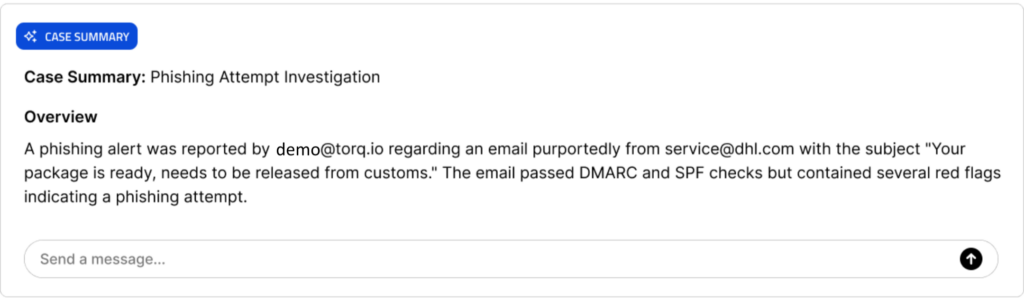

Consider a scenario where an outgoing SOC team provides an AI-generated summary during a shift handover. The summary reads:

“A phishing alert was reported by an employee regarding an email from [email protected] with the subject ‘Your package is ready, needs to be released from customs.’ The email passed DMARC and SPF checks but contained several red flags indicating a phishing attempt.” [Figure 1]

At first glance, this summary appears concise and informative, but a trained eye will notice that it lacks critical structure and detail. It doesn’t specify what exactly happened beyond a general phishing alert, when the events took place, or how it concluded that this was a phishing attempt. Moreover, it cites no original evidence or analyses to support its findings.

This absence of structured information and verifiable evidence leaves the incoming team with unanswered questions like:

- Which systems were affected?

- What specific red flags were identified?

- Were there malicious attachments or links that need immediate attention?

Without this crucial information, the incoming team may misinterpret the severity of the threat or overlook essential steps needed for mitigation. The lack of evidence-backed details also opens the door for AI hallucinations — incorrect or fabricated information generated by AI — which can mislead the team into focusing on the wrong areas.

Instead of facilitating a smooth transition, the unstructured and unsupported summary creates confusion, delays response times, and potentially allows the threat to persist or escalate.

The Torq Standard: Structured, Evidence-Backed Summaries

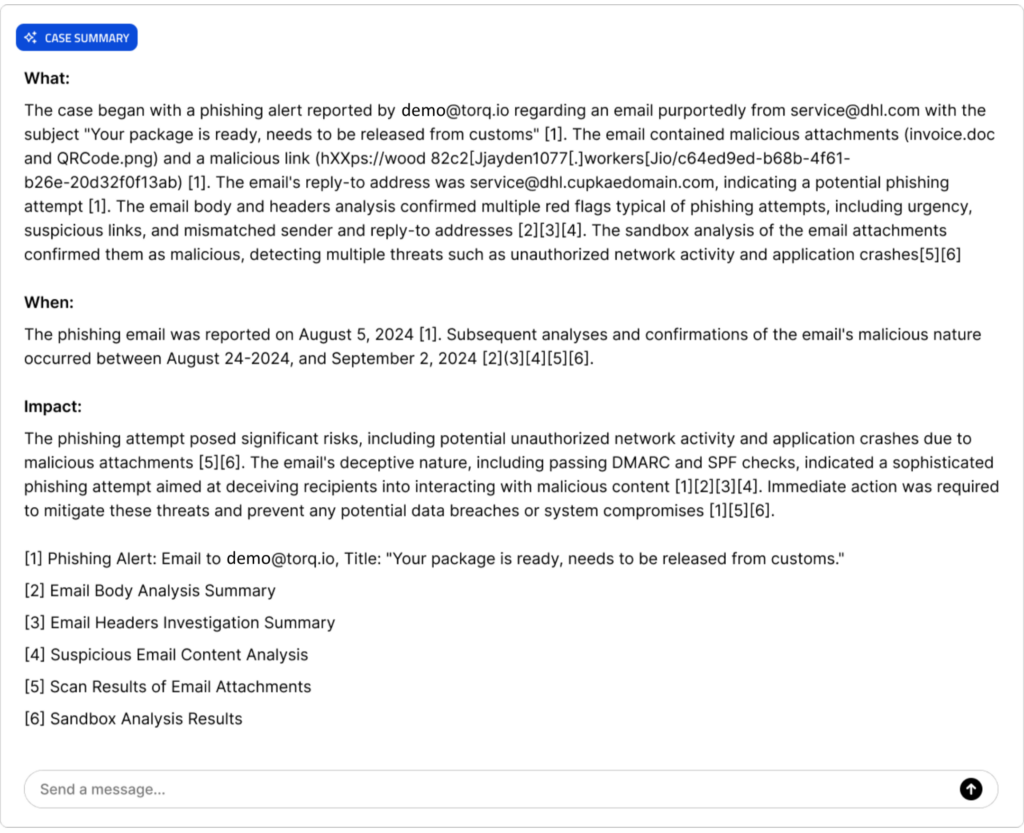

Now imagine the same scenario, but this time the outgoing SOC team provides an AI-generated summary that is structured and evidence-backed. The summary is organized into clear sections (What happened, When it happened, and How it happened), each supported by direct citations to the original forensic evidence.

“What happened: A phishing alert was reported by an employee regarding an email purportedly from [email protected] with the subject ‘Your package is ready, needs to be released from customs’ [1]. The email contained malicious attachments (invoice.doc and QRCode.png) and included a suspicious link (hXXps://wood82c2[.]jayden1077[.]workers[.]io/c64ed9ed-b68b-4f61-b26e-20d32f0f13ab) [1]. The ‘Reply-To’ address differed from the ‘From’ address, indicating a potential phishing attempt [2].

When it happened: The phishing email was reported on August 5, 2024 [1]. Subsequent analyses and confirmations occurred between August 24 and September 2, 2024 [2][3][4][5][6].

How it happened: The email passed DMARC and SPF checks, but the discrepancy in the ‘Reply-To’ field raised suspicion [2]. Email body analysis flagged several phishing indicators: a non-legitimate link, a demand for information via a link, a false sense of urgency, and a lack of sender details [3][4]. Sandbox analysis of the attachments confirmed them as malicious, detecting unauthorized network activity and potential application crashes [5][6].” [Figure 2]

Citations:

- [1] Phishing Alert Email received by an employee, dated August 5, 2024.

- [2] Email Header Analysis Report, conducted on August 24, 2024.

- [3] Email Body Content Analysis Summary, dated August 25, 2024.

- [4] Suspicious Email Indicators Checklist, referenced on August 26, 2024.

- [5] Attachment Scan Results from Antivirus Software, dated August 30, 2024.

- [6] Sandbox Analysis Report of Email Attachments, completed on September 2, 2024.
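To make the structure concrete, here is a minimal Python sketch of how a structured, evidence-backed summary might be represented and checked. It is a hypothetical illustration, not Torq’s implementation: the `Evidence` and `CaseSummary` classes, the `validate` and `evidence_window` helpers, and the rule that every sentence must carry at least one `[n]` citation are all assumptions. The sketch confirms that the three sections exist, that each sentence cites evidence, and that every citation resolves to an item in the evidence list. It also derives the date range for the When section from the cited evidence instead of letting the model state it freely.

```python
import re
from dataclasses import dataclass
from datetime import date


# Hypothetical schema for illustration only; not Torq's actual data model.
@dataclass(frozen=True)
class Evidence:
    ref: int        # citation number, e.g. 2 for "[2]"
    title: str      # human-readable name of the artifact
    recorded: date  # date the evidence was produced or referenced


@dataclass
class CaseSummary:
    sections: dict[str, str]  # section name -> prose containing [n] citations
    evidence: list[Evidence]  # the original forensic evidence being cited


REQUIRED_SECTIONS = ("What happened", "When it happened", "How it happened")
CITATION = re.compile(r"\[(\d+)\]")
# Split on whitespace that follows a period; the "[.]" in defanged URLs
# is never followed by whitespace, so it does not end a sentence.
SENTENCE_END = re.compile(r"(?<=\.)\s+")


def cited_refs(text: str) -> set[int]:
    """Return every citation number that appears as [n] in the text."""
    return {int(n) for n in CITATION.findall(text)}


def validate(summary: CaseSummary) -> list[str]:
    """List problems: missing sections, uncited sentences, dangling citations."""
    known = {e.ref for e in summary.evidence}
    problems: list[str] = []
    for name in REQUIRED_SECTIONS:
        text = summary.sections.get(name, "").strip()
        if not text:
            problems.append(f"missing section: {name}")
            continue
        for sentence in SENTENCE_END.split(text):
            if not cited_refs(sentence):
                problems.append(f"uncited claim in {name}: {sentence!r}")
        for ref in sorted(cited_refs(text) - known):
            problems.append(f"unresolved citation [{ref}] in {name}")
    return problems


def evidence_window(evidence: list[Evidence], refs: set[int]) -> tuple[date, date]:
    """Earliest and latest dates among the cited evidence, for the When section."""
    dates = [e.recorded for e in evidence if e.ref in refs]
    if not dates:
        raise ValueError("none of the cited references are in the evidence list")
    return min(dates), max(dates)


# The six evidence items from the citation list above.
EVIDENCE = [
    Evidence(1, "Phishing Alert Email", date(2024, 8, 5)),
    Evidence(2, "Email Header Analysis Report", date(2024, 8, 24)),
    Evidence(3, "Email Body Content Analysis Summary", date(2024, 8, 25)),
    Evidence(4, "Suspicious Email Indicators Checklist", date(2024, 8, 26)),
    Evidence(5, "Attachment Scan Results", date(2024, 8, 30)),
    Evidence(6, "Sandbox Analysis Report", date(2024, 9, 2)),
]

if __name__ == "__main__":
    # A hypothetical draft whose last sentence makes an unsupported claim.
    draft = CaseSummary(
        sections={
            "What happened": "An employee reported a phishing email [1]. "
                             "The Reply-To address differed from the From address [2].",
            "When it happened": "The email was reported on August 5, 2024 [1].",
            "How it happened": "Sandbox analysis confirmed the attachments as malicious [5][6]. "
                               "The sender looked legitimate.",
        },
        evidence=EVIDENCE,
    )
    for problem in validate(draft):
        print(problem)
    print(evidence_window(EVIDENCE, {2, 3, 4, 5, 6}))
```

Run as a script, the draft flags only its final sentence, which makes a claim with no supporting evidence, and the window for the cited analyses [2] through [6] comes out as August 24 to September 2, 2024. Treating every uncited sentence as a defect is a deliberately strict choice; a real system might allow unsupported context sentences but mark them for the reader.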

With this structured summary and direct citations, the incoming team can quickly grasp the situation’s full context. They have immediate access to the supporting evidence, allowing them to validate the AI’s conclusions and understand the threat’s specifics without delay. This reduces the risk of misinterpretation and ensures that critical details aren’t overlooked.

Citations that link back to the original forensic evidence not only mitigate the risk of AI hallucinations but also build trust in AI-generated insights. Team members can verify each point, so their actions rest on accurate and reliable information. This structured, evidence-based approach turns the shift handover into a seamless transition, empowering the incoming team to act swiftly and effectively against the threat.

Following this approach, Torq has built AI-based security automation that mirrors how SOC professionals reason through an incident: what happened, when it happened, and how, with evidence for each claim. Because analysts can validate every finding, the summaries build trust in AI and make collaboration between people and AI systems more effective.

Strengthening Your SOC with Trustworthy AI

Innovation and trust go hand in hand, especially in the critical field of cybersecurity. The challenges we’ve discussed highlight the necessity for AI solutions that do more than automate — they need to enhance trust, collaboration, and efficiency within your team.

This is where Torq’s AI capabilities become your trusted partner in navigating the complexities of security operations. By providing structured, evidence-backed summaries, Torq’s AI Case Summaries make every piece of information transparent and verifiable. They help your team work faster, make informed decisions with confidence, and hand off cleanly between shifts. By reducing uncertainty and mitigating the risk of AI errors, they streamline operations and strengthen your overall security posture.

Together, we’re fostering a collaborative environment where AI and human expertise unite to safeguard your organization more effectively than ever before.