As businesses continue to evolve, automation has become an essential aspect of modern operations. The benefits of automation are numerous, ranging from reducing operational costs to increasing security, efficiency, and accuracy. However, with so many automation solutions available on the market, selecting the right one for your business can be challenging.

As a product specialist at Torq with over a decade of experience in field roles, I have watched countless businesses work through their automation journey, and I want to share some insights with you. By the end of this article, you will have a better understanding of the critical factors to consider when choosing the right automation solution.

1. Business Requirements, Technology Stack, In-House Solutions

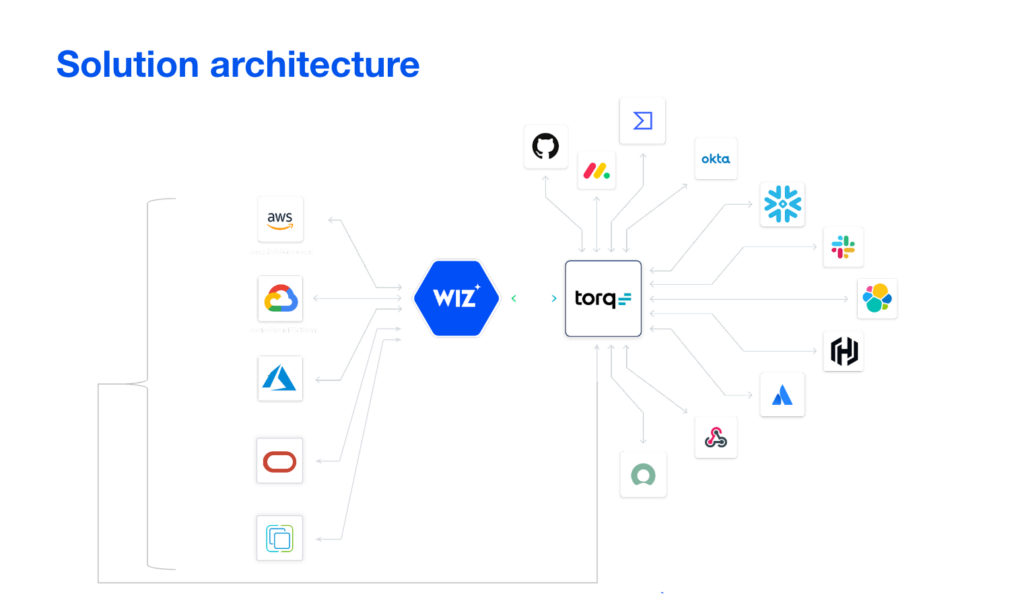

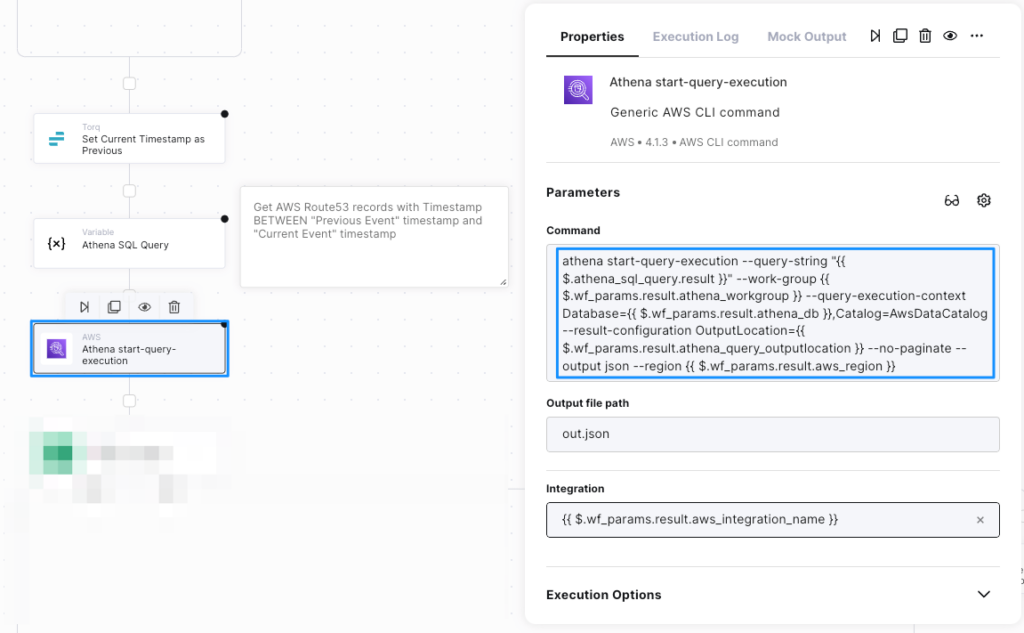

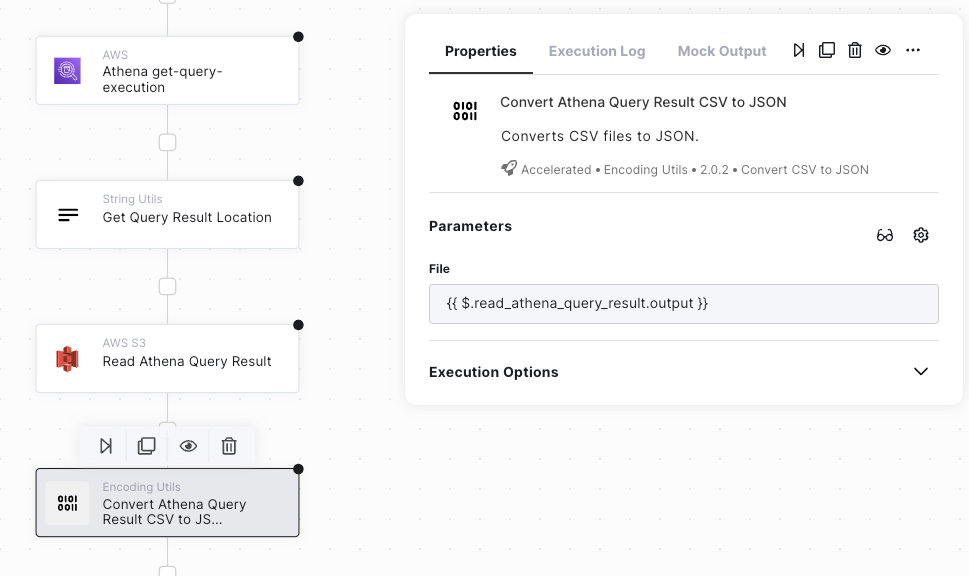

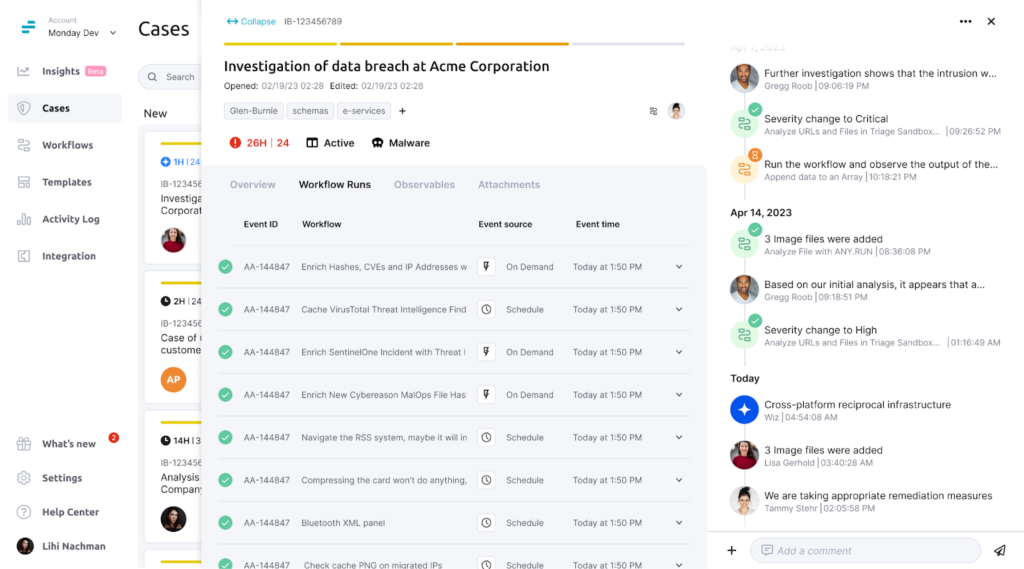

The first step towards selecting the right automation tool is understanding your business requirements. This involves identifying processes that need automation (e.g., repetitive tasks, high error rates) and mapping the technology stack.

It is crucial to evaluate how seamlessly the automation solution integrates with your stack and whether it provides out-of-the-box functions relevant to it. Both improve time-to-value and ROI by making automation workflows simpler to create.

It’s equally important to map your in-house customizations and examine how the potential solution works with them. Without that fit, you will most likely face “unplanned costs,” and in the worst case, complete blockers.
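The mapping exercise described above can be sketched as a simple coverage check. This is a minimal illustration, not a vendor tool: the function name `coverage_report` and the tool names in the example are hypothetical placeholders, and a real evaluation would also look at how deep each integration goes, not only whether it exists.

```python
def coverage_report(stack, vendor_integrations):
    """Compare the tools in your stack against a vendor's integration list.

    Returns (covered, gaps, ratio). Names are matched case-insensitively.
    All inputs are plain lists of tool names that you supply yourself.
    """
    supported = {name.lower() for name in vendor_integrations}
    covered = sorted(tool for tool in stack if tool.lower() in supported)
    gaps = sorted(tool for tool in stack if tool.lower() not in supported)
    ratio = len(covered) / len(stack) if stack else 0.0
    return covered, gaps, ratio


# Hypothetical example data: replace with your own inventory.
my_stack = ["siem", "edr", "ticketing", "in-house-asset-db"]
vendor_list = ["SIEM", "EDR", "Ticketing", "chat"]

covered, gaps, ratio = coverage_report(my_stack, vendor_list)
print("Covered out of the box:", covered)
print("Needs custom or generic integration:", gaps)
print("Coverage ratio:", ratio)
```

Anything that lands in `gaps`, typically your in-house tools, is where unplanned costs tend to appear, so those are the items to raise with the vendor first.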

2. Out-of-the-Box vs Generic

Flexibility is another important factor to consider when selecting an automation solution. Wait a minute! What do we mean by flexibility? Here it refers to the balance between out-of-the-box capabilities and generic/customization capabilities. It is essential to ensure that the “out-of-the-box” capabilities can be fully customized. Based on my experience, missing even one piece of the puzzle can prevent successful workflow automation, so you had better be able to customize it, or you might hit the same wall that is killing the legacy SOARs (content and integration creation; “Sorry, we don’t support it”).

However, beware of generic solutions that may satisfy use cases but hinder time-to-value and solution maintenance (for example, vendor API updates). So at the end of the day, having both out-of-the-box and generic capabilities is desirable.

3. POC Time + Success Criteria

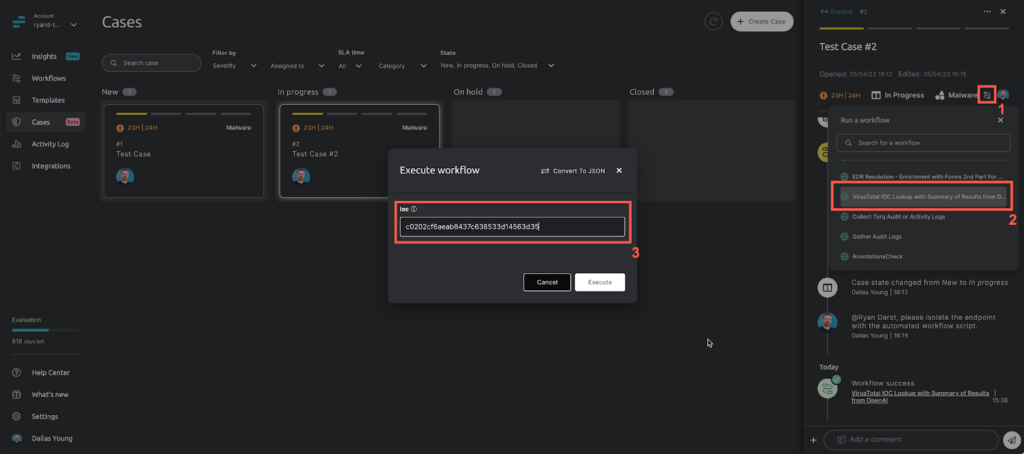

After understanding your business requirements and technology stack, the next step is to evaluate potential automation solutions through a Proof of Concept (POC). The focus is on time-to-value and ROI. Choose your most important use cases and evaluate how quickly they can be made fully operational, and how easily your own team can get them there. The best way to find out is to ask your team to complete a use case from scratch using the potential automation solution.

Here are the specific success criteria for the POC as I see them (I promise they will save you money in the long run):

- New content creation

- New integration creation: Out-of-the-box and generic capabilities

- Bring your own code: Relevant when you have legacy code, such as a big in-house solution that you can’t replace right away, or even empowering someone who wants to use code for specific use cases

- Connectivity capabilities: More than APIs (SSH, Bash, SQL, etc.)

- Life cycle management: Vendor API updates, production vs testing separation, testing and CI/CD solutions, etc.

- Templates: Out-of-the-box workflows that reflect different use cases

- Content sharing: Workflows and steps, relevant for a super user who helps others

- Compliance: Meet your company’s standards
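One way to make the criteria above comparable across vendors is a weighted scorecard. The sketch below is only an illustration under stated assumptions: the weights, the 0 to 5 scoring scale, the choice to treat compliance as a pass/fail gate, and the names `CRITERIA_WEIGHTS` and `poc_score` are all hypothetical and should be adjusted to your own priorities.

```python
# Hypothetical weights: higher means the criterion matters more to your team.
CRITERIA_WEIGHTS = {
    "new_content_creation": 3,
    "new_integration_creation": 3,
    "bring_your_own_code": 2,
    "connectivity": 2,
    "lifecycle_management": 2,
    "templates": 1,
    "content_sharing": 1,
}

MAX_SCORE = 5  # each criterion is scored from 0 (failed) to 5 (excellent)


def poc_score(scores, compliant):
    """Return a normalized POC score between 0.0 and 1.0.

    Compliance is treated as a gate (an assumption of this sketch):
    a solution that does not meet company standards scores 0.0.
    """
    if not compliant:
        return 0.0
    missing = set(CRITERIA_WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"Missing scores for: {sorted(missing)}")
    total_weight = sum(CRITERIA_WEIGHTS.values())
    weighted = sum(CRITERIA_WEIGHTS[name] * scores[name] for name in CRITERIA_WEIGHTS)
    return weighted / (MAX_SCORE * total_weight)


# Hypothetical scores collected by your team during the POC.
candidate_scores = {
    "new_content_creation": 4,
    "new_integration_creation": 5,
    "bring_your_own_code": 3,
    "connectivity": 4,
    "lifecycle_management": 2,
    "templates": 5,
    "content_sharing": 3,
}

print(round(poc_score(candidate_scores, compliant=True), 2))
```

Scoring each candidate with the same weights, agreed on before the POC starts, keeps the comparison honest and makes weak spots such as life cycle management visible early.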

4. Scale + Vision

Now that we have a candidate that answers all of that (hint: Torq), it’s time to validate what the future looks like. It’s time to focus on scalability, maintenance, and the vendor’s vision. As your business grows, the solution should scale with it. It is essential to choose an automation system that can handle increased data volumes and adapt to changing business needs over time. Selecting a solution from a vendor with a clear vision for the future can be critical to ensuring the long-term success of your automation efforts.

Choosing the right automation tool is critical for a modern security team. Examine the standout aspects of each solution closely, as the choice can have a significant impact on your organization, particularly in terms of efficiency, security posture, and cost.

While there are many factors to consider when selecting a security automation tool, these essential elements should guide your decision-making process and increase the likelihood of success. At Torq, we understand the importance of selecting the right automation tool (which is why we built a Hyperautomation one), and I hope this article has given you valuable insights for making an informed decision.

Why These Criteria Matter: Real-World Results

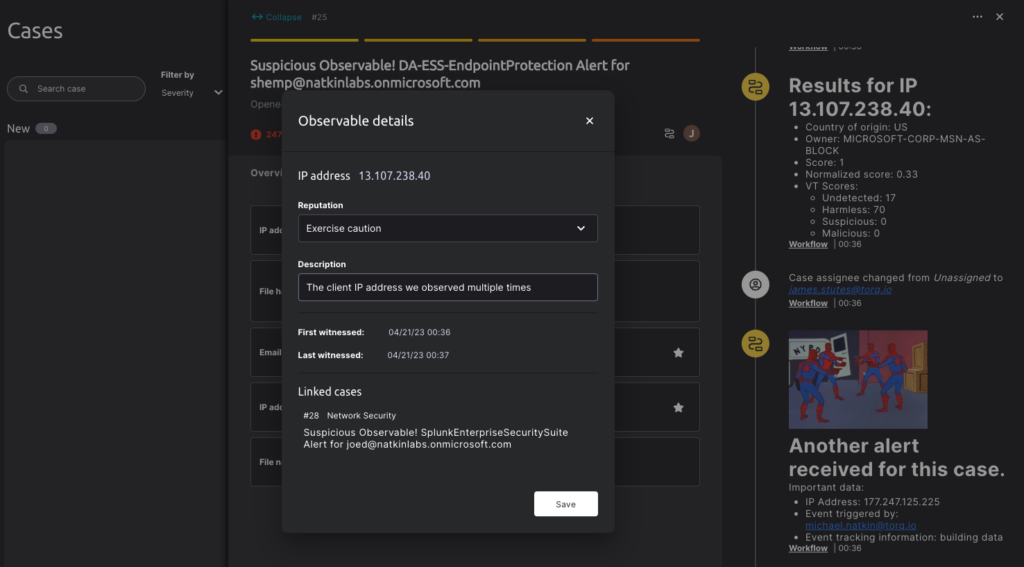

Seamless integration, immediate value: Many security teams waste months trying to connect automation tools to their existing stack — or hit dead ends when vendors say “we don’t support it.” Torq’s 300+ pre-built integrations and AI-powered custom integration builder eliminate these blockers entirely. Fiverr’s VP of Business Technologies puts it simply: “The only limit Torq has is people’s imaginations.”

Flexibility without fragility: Legacy SOAR platforms force teams to choose between rigid out-of-the-box playbooks or complex custom code that breaks with every API update. Torq delivers both: pre-built templates for fast deployment plus no-code, low-code, and full-code customization for any edge case. Riskified transformed efficiency across all five security teams by tailoring workflows to their specific needs.

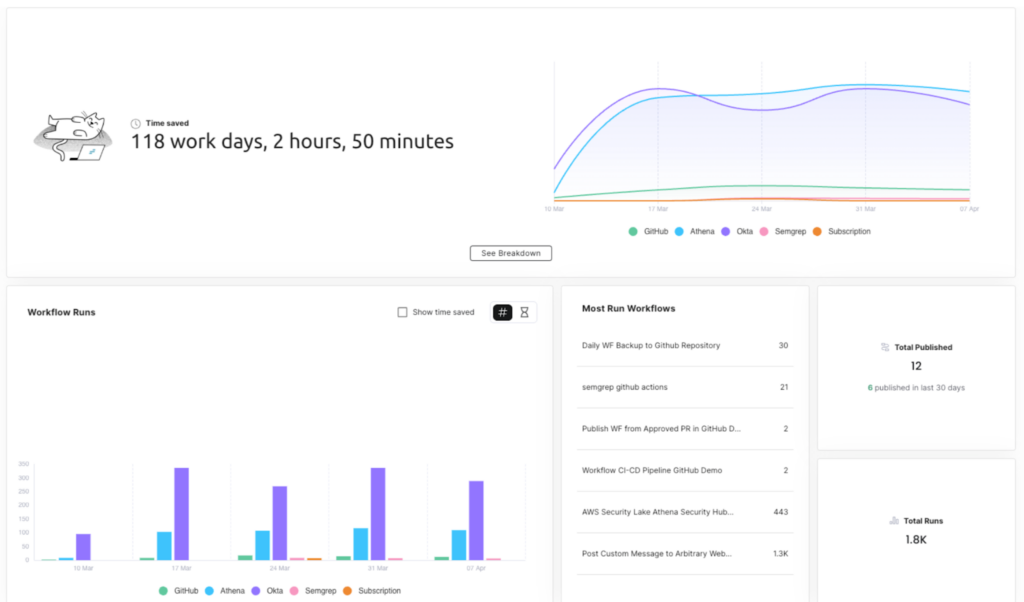

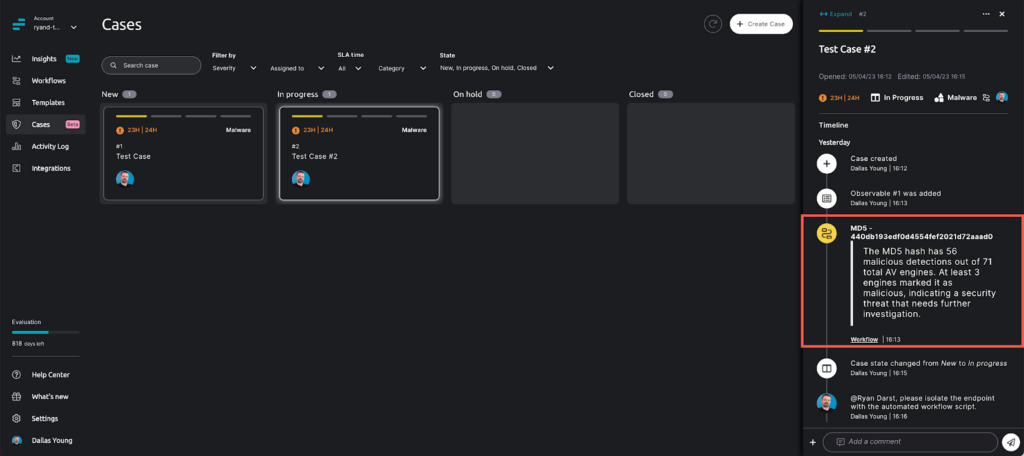

Rapid time-to-value: Long POCs drain resources and delay ROI. Torq deploys in minutes with agentless architecture and intuitive workflow building that doesn’t require dedicated engineering. Carvana automated 41 different runbooks within just one month of deployment, proving that fast POCs translate to fast production value.

Scalability without compromise: Legacy platforms buckle under enterprise-scale alert volumes, forcing teams back to manual workarounds. Torq’s cloud-native architecture scales elastically, handling millions of events without degradation. Carvana’s agentic AI now handles 100% of their Tier-1 alerts, while Compuquip saves hundreds of hours monthly on analysis.

Future-proof investment: Choosing a vendor without a clear roadmap means inheriting technical debt. Torq’s vision (AI-native, agentic automation) is already delivering what legacy SOAR promised but never achieved. Valvoline cut analyst workload by 7 hours per day, with Torq continuously evolving to handle emerging threats and use cases.

Making the Right Choice

Selecting a security automation solution isn’t just a technology decision — it’s a strategic investment that will shape your SOC’s effectiveness for years to come. The right platform integrates seamlessly with your stack, balances flexibility with out-of-the-box value, proves itself quickly in a POC, and scales alongside your business.

The wrong choice means months of integration headaches, rigid playbooks that can’t adapt, and a solution you’ll be replacing in two years.

Torq Hyperautomation was built to meet all four criteria, with 300+ integrations, no-code customization, rapid deployment, and an enterprise-scale architecture that’s already powering SOCs at Carvana, Fiverr, Riskified, Valvoline, and more.

Ready to see how Torq checks every box?