Contents

Get a Personalized Demo

See how Torq harnesses AI in your SOC to detect, prioritize, and respond to threats faster.

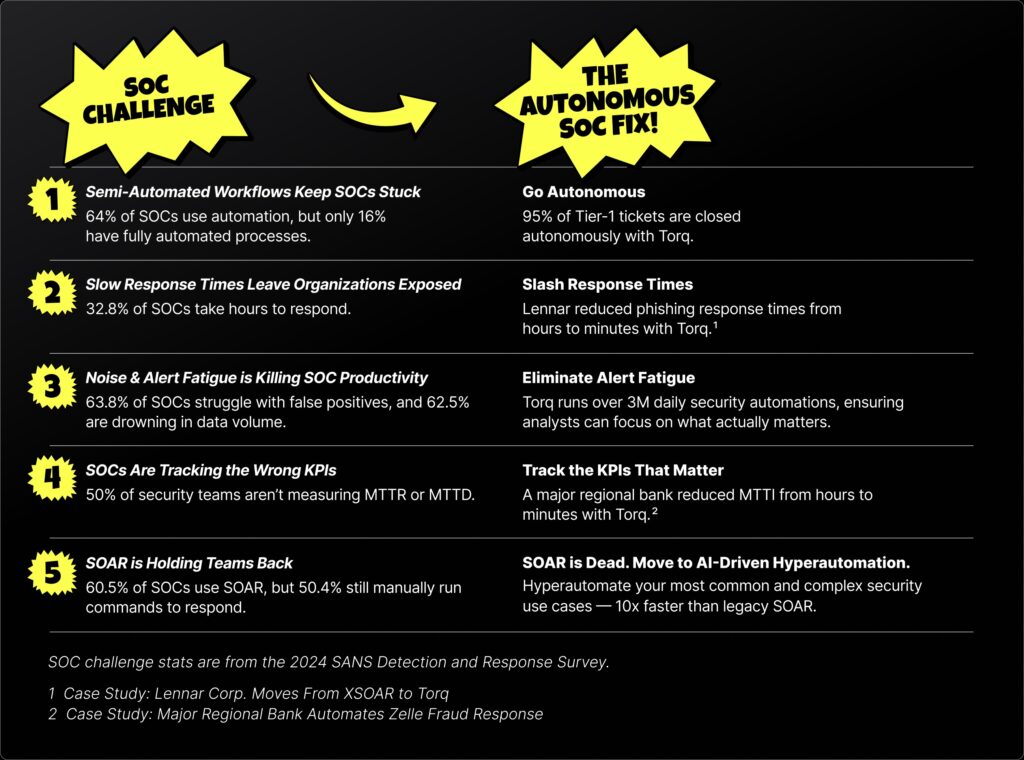

Every second an alert goes untriaged, a misconfiguration goes unnoticed, or a phishing email sits in an inbox, the odds tip in an attacker’s favor. And as threats evolve in speed, stealth, and scale, manual response doesn’t stand a chance.

This guide breaks down the most prevalent types of cybersecurity attacks and how they continue to evade traditional defenses. More importantly, it shows how modern SOCs are automating their defenses with Torq Hyperautomation™ — using AI-powered detection, investigation, and response to identify, prioritize, and eliminate threats at machine speed.

What Is a Cyberattack?

A cyberattack is a deliberate attempt by malicious actors to infiltrate, disrupt, or damage a computer system, network, or digital infrastructure. These attacks are typically launched to steal data, alter or destroy systems, disrupt operations, or expose sensitive information.

It’s not just about stolen data or defaced websites anymore. Today’s cybercriminals and hackers are strategic. They exploit weaknesses across your people, processes, and technology — whether it’s a vulnerable application, a misconfigured cloud setting, or a team member who clicks on the wrong link.

The motivations vary — financial gain, espionage, political disruption, or even pure sabotage — but the outcome is always costly. Successful cyberattacks can cripple operations, exfiltrate customer or proprietary data, and inflict long-term reputational damage. For regulated industries, the fallout often includes hefty compliance fines and legal consequences.

12 Types Cyberattacks — and How to Stop Them with Hyperautomation

1. Phishing & Spear Phishing

Phishing attacks are among the most widespread and successful forms of cyberattacks. They typically arrive via email, SMS, or social media, disguised as legitimate communications from trusted entities. The goal is to lure recipients into clicking malicious links, downloading malware, or submitting sensitive data such as credentials, banking information, or PII.

When it comes to spear phishing, instead of sending mass emails, threat actors craft personalized messages using information about their targets (often scraped from LinkedIn, websites, or previous breaches). This makes them highly convincing and highly effective.

Successful phishing can lead to full account takeovers, data breaches, financial theft, or further malware infections. It remains one of the top initial access vectors for ransomware attacks.

Torq Advantage: Torq Hyperautomation™ integrates with several key partners to help organizations prevent and mitigate phishing attacks and avoid costly data breaches. To defend against phishing attacks, the first line of automated protection is the email inbox. Torq integrates with Secure Email Gateway (SEG) providers like Abnormal Security, Microsoft, Proofpoint, and Mimecast to improve detection and response by correlating insights across platforms. Once a threat is identified, Torq automatically removes malicious emails and enforces updated security controls.

Key tactics include analyzing multiple email attributes for risk signals, detonating suspicious content in sandbox environments to confirm threats safely, and blocking malicious senders and domains organization-wide to stop future attacks.

2. Denial-of-Service (DoS & DDoS)

DoS attacks flood a system or server with traffic until it becomes unresponsive. DDoS attacks (Distributed Denial-of-Service) use networks of infected devices (botnets) to generate massive traffic volumes from multiple sources, making them harder to block.

DDoS attacks can cripple online services, e-commerce platforms, or customer portals, especially during peak hours. While often used to cause disruption, they can also serve as cover for more covert cyber intrusions.

Torq Advantage: Torq defends against DoS and DDoS attacks by integrating with providers like Cloudflare, Akamai, and AWS to automate real-time detection, response, and remediation. Triggered by Cloudflare alerts, Torq workflows can correlate traffic anomalies, block malicious IPs or domains, adjust firewall or rate-limiting rules, and alert teams via Slack, Teams, or ITSM.

3. Spoofing

Spoofing tricks users or systems into believing a malicious source is trustworthy, via email, IP, DNS, or even internal system impersonation. Domain lookalikes and deepfake voice or video are increasingly used in spoofing, particularly in executive impersonation fraud.

Torq Advantage: For impersonation-based spoofing like CEO fraud or Slack impersonation, Torq uses behavior analytics from IAM tools like Okta and Microsoft Entra ID: flag anomalies in sender behavior, access requests, or login locations and auto-enforce multi-factor authentication (MFA), revoke sessions, or escalate for identity verification.

4. Code Injections

SQL Injection (SQLi) is a code injection technique targeting web applications that interact with databases. Hackers can manipulate backend databases by inserting malicious SQL statements into input fields (such as login forms).

SQLi can expose or tamper with sensitive records, bypass authentication mechanisms, and even escalate access privileges. It’s especially dangerous for e-commerce, SaaS, and any app handling sensitive or financial data.

Torq Advantage: Torq protects against malicious code injection attacks by sanitizing all user inputs and workflow data using built-in utilities like jsonEscape and Escape JSON String. These steps ensure malicious payloads can’t be interpreted as executable code during automation. All scripts in Torq run in a secure, sandboxed environment with strict access controls. Torq also applies input validation and enforces least-privilege access to prevent unauthorized actions, even if injection is attempted. Additionally, every workflow execution is logged and monitored, enabling automated responses to any suspicious behavior.

5. Password Attacks

Password-based attacks aim to compromise user credentials. They come in multiple forms:

- Brute force: trying every possible combination

- Dictionary attacks: using common passwords

- Credential stuffing: reusing leaked credentials across services

Weak passwords, lack of MFA, and poor password hygiene make systems more vulnerable. Gaining access to even one user account can allow hackers to escalate privileges, exfiltrate data, or distribute malware internally.

Torq Advantage: Torq helps protect against password attacks by automating identity-centric security workflows across your entire authentication stack. It integrates with IAM, MFA, and SSO providers like Okta, Azure AD, Duo, and Ping Identity to monitor suspicious login activity, such as brute force attempts or credential stuffing. When anomalies are detected, Torq can:

- Automatically block IPs or user accounts after repeated failed logins

- Enforce step-up authentication or password resets based on contextual risk

- Notify SecOps teams via Slack, Teams, or ticketing systems

- Correlate identity signals with threat intel to identify compromised accounts

- Trigger full response workflows, including deprovisioning or re-authentication flows

By automating detection, correlation, and response, Torq reduces the exposure window and prevents password-based compromises from escalating into breaches.

6. Insider Threats

Insider threats arise from individuals within the organization — employees, contractors, or partners — who misuse their access. These threats can be malicious (data theft, sabotage) or negligent (falling for phishing, misconfiguring systems).

Insiders bypass perimeter defenses and often have direct access to sensitive assets. Detecting insider activity is difficult, making this a high-risk attack vector in regulated industries like finance and healthcare.

Torq Advantage: Torq protects against insider threats by automating the continuous monitoring, detection, and response to anomalous user behavior across identity, endpoint, and cloud systems. It integrates with platforms like Okta, CrowdStrike, Microsoft Defender, and data loss prevention (DLP) tools to detect deviations from normal activity, such as unusual login times, excessive data downloads, or privilege escalation.

7. Supply Chain Attacks

A supply chain attack targets third-party vendors, software dependencies, or service providers to reach a larger target organization. Examples include:

- Malware in software updates (e.g., SolarWinds)

- Compromised APIs or SDKs

- Vulnerabilities in third-party platforms or logistics partners

These attacks are stealthy, difficult to detect, and highly scalable. They exploit trust relationships to bypass traditional defenses, often affecting hundreds or thousands of downstream organizations.

Torq Advantage: Torq helps prevent supply chain attacks by continuously monitoring and securing third-party integrations, tools, and services. It integrates with code repositories, SaaS platforms, package managers, and CI/CD pipelines to detect anomalies, unauthorized changes, and known vulnerabilities before they can be exploited.

8. Social Engineering Attacks

These attacks exploit human behavior rather than technical flaws. Threat actors may impersonate executives (CEO fraud), fake emergencies, or build trust over time (pretexting) to trick victims into disclosing sensitive data or performing unauthorized actions.

Social engineering can bypass even the most robust technical controls. It’s often used as the first step in broader attacks like business email compromise (BEC) or ransomware deployment.

Torq Advantage: Torq protects against social engineering attacks by integrating with identity platforms to detect suspicious account activity in real time. When unusual behavior is detected, it can automatically trigger actions like step-up authentication, account lockdown, or escalation to analysts.

Torq enriches alerts with threat intelligence and behavioral context to distinguish real threats from false positives. For confirmed attacks, it automates full response, isolating users, blocking malicious domains, and notifying teams through Slack, Teams, or ticketing systems. This automation reduces analyst fatigue and ensures faster, more accurate responses to identity-based threats.

9. Zero-Day Exploits

Zero-day attacks take advantage of unknown or unpatched software vulnerabilities. Because the vendor is unaware and no fix exists at the time of the attack, these threats are extremely dangerous. Organizations have no immediate defense, making zero-days a favorite tool for sophisticated threat actors. Once disclosed, the race is on to patch before mass exploitation begins.

Torq Advantage: Torq protects against zero-day exploits by automating rapid detection, enrichment, and response workflows across your security stack. When suspicious activity or anomalous behavior is flagged by tools like EDR, NDR, or threat intelligence platforms, Torq immediately correlates the event across systems to determine risk level.

Torq enhances visibility into potential zero-day indicators using real-time enrichment from threat feeds and behavioral data. It then automatically triggers protective actions such as quarantining endpoints, isolating network segments, or blocking suspicious domains. By eliminating manual delay, Torq helps security teams contain and remediate zero-day threats before they can escalate.

10. Malware

Malware (malicious software) encompasses viruses, worms, spyware, trojans, and ransomware. It infiltrates systems to damage, disrupt, or steal data. Ransomware, in particular, encrypts files and demands a ransom payment — often in cryptocurrency — for the decryption key.

Malware is among the most prevalent cybersecurity threats, encompassing various malicious software types designed to compromise and damage systems:

- Viruses attach themselves to legitimate files or programs, spread from one system to another, corrupt or destroy data, and affect system performance.

- Worms replicate independently across networks, rapidly spreading without user action, causing bandwidth overload and potential system crashes.

- Trojans masquerade as legitimate software, tricking users into downloading malware that provides attackers with unauthorized access to systems.

- Spyware secretly gathers sensitive user information, such as login credentials and financial details, without consent.

- Ransomware encrypts data, holding systems hostage until ransom payments are made, severely impacting business continuity.

Torq Advantage: When tools like EDR, SIEM, or email security platforms identify suspicious file behavior, Torq automatically enriches the alert with threat intelligence, correlates it across systems, and launches predefined remediation workflows. These can include isolating infected endpoints, disabling compromised accounts, blocking malicious domains, and alerting internal stakeholders via Slack or ticketing systems.

11. Ransomware

Modern ransomware groups operate like organized businesses, using affiliate models (RaaS) and combining extortion with data leaks to increase pressure on victims.

Ransomware can shut down entire business operations for days or weeks. The financial impact includes ransom payments, incident response costs, lost revenue, and reputational damage. Sectors like healthcare, government, and retail are frequent targets.

Torq Advantage: Torq’s Hyperautomation capabilities help stop the attack early by instantly identifying abnormal encryption activity or lateral movement, triggering actions like locking down file shares, suspending network access, and kicking off recovery protocols. Torq also enables proactive protection by scanning IOCs from threat intelligence sources and applying them across your environment, preventing known malware from ever reaching your systems.

By automating investigation and response from detection to remediation, Torq helps reduce dwell time, minimize damage, and keep ransomware and malware threats from escalating into full-blown business crises.

12. AI-Powered Attacks

AI-powered threats aren’t just more efficient — they’re more deceptive, personalized, and scalable than anything seen before. They mimic humans, adjust based on feedback, and execute attacks autonomously at a scale no SOC could keep up with manually.

AI-powered cyberattacks differ from traditional threats due to:

- Smarter threat intelligence: Unlike static, rules-based attacks, AI-powered threats learn from failed attempts and continuously optimize their strategies.

- Autonomous targeting: AI automates target discovery, scouring public data, social profiles, and exposed assets to pinpoint weaknesses.

- Personalized deception: Spear phishing becomes supercharged. AI customizes phishing campaigns based on granular details about each target, dramatically increasing success rates.

- Deepfake impersonation: AI enables real-time creation of convincing voice or video deepfakes, fueling new forms.

As AI continues to evolve, so do the threats, making intelligent, automated defense essential.

How to Protect Against Cyberattacks

- Employee training: Regularly train staff to recognize and report phishing, BEC, and social engineering threats.

- Multi-factor authentication: Add identity layers like biometrics or tokens to stop attackers, even if passwords are stolen.

- Password policies: Enforce strong, unique passwords and promote password manager use to prevent easy account takeovers.

- Security audits: Run regular audits and vulnerability scans to find and fix security gaps before attackers do.

- Threat detection tools: Use AI-driven tools for real-time visibility and faster threat detection across your environment.

- Incident response plans: Create and test IR plans to act fast, minimize damage, and recover quickly after attacks.

- Automate with Torq Hyperautomation: Eliminate manual tasks with AI-driven workflows that detect, triage, and respond to threats in real time.

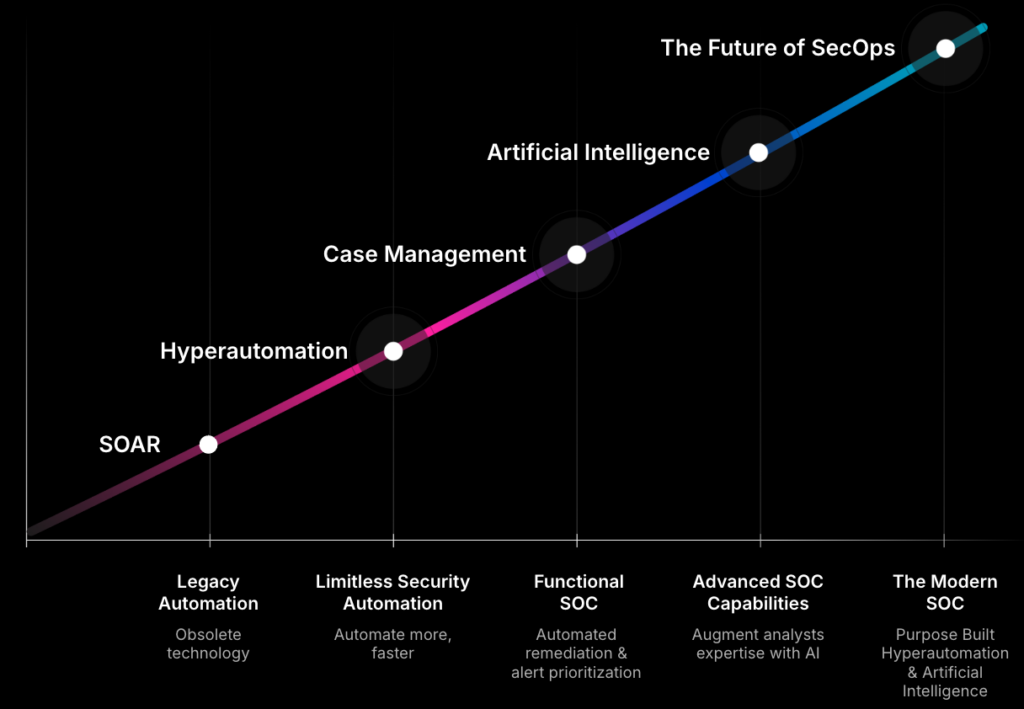

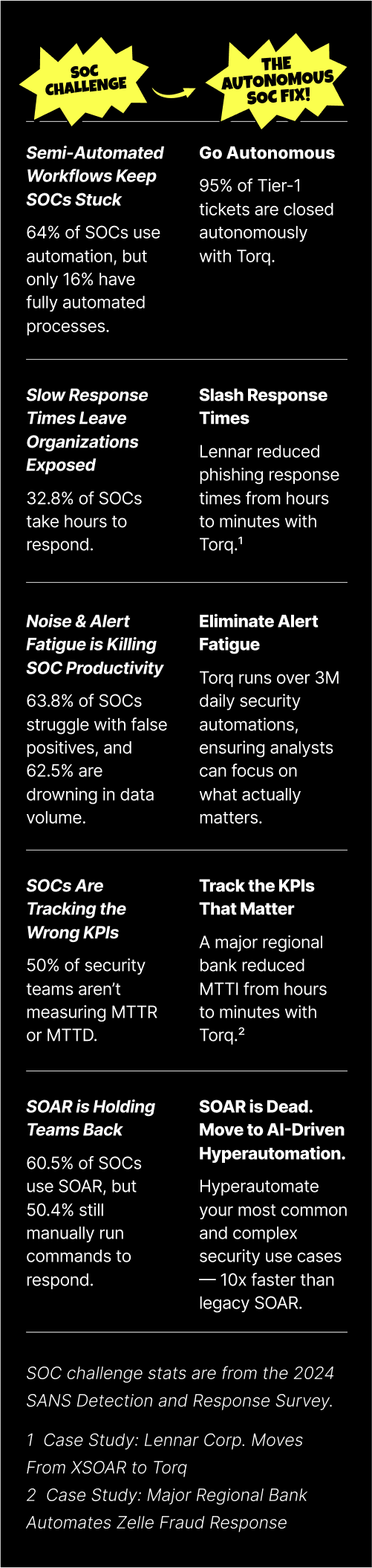

Combatting Cyberattacks with Hyperautomation

The speed, volume, and complexity of today’s cyberattacks have outpaced what humans can handle alone. Traditional security models rely heavily on manual processes — analysts combing through alerts, chasing down indicators of compromise, and triggering containment steps one by one. That’s not just inefficient — it’s dangerous.

By combining intelligent automation with AI-driven decisioning, Torq Hyperautomation empowers security teams to detect, investigate, and respond to threats at machine speed. Instead of drowning in noise, analysts are armed with context-rich insights, automated playbooks, and dynamic workflows that act instantly across their entire security stack.

Here’s how:

- Instant threat detection and prioritization: Torq listens across your entire security stack, continuously ingesting and analyzing signals. Our multi-agent system instantly triages threats based on risk, business context, and policy.

- End-to-end case automation: Torq auto-generates security cases, populates them with all the evidence and context, and assigns them based on team workflows. No more swivel-chair analysis. Just the right response, right now.

- AI-driven investigation and remediation: With Socrates, our agentic AI SOC Analyst, Torq doesn’t just respond; it thinks. It uses real-time threat intelligence, enriches alerts, recommends the next best action, and even auto-remediates incidents across your environment.

Stay Ahead of Cyber Threats with Torq Hyperautomation

Cyberattacks aren’t slowing down, and neither should your defenses. Understanding the most common types of cyberattacks is only the beginning. The real advantage comes from responding faster, smarter, and at scale. Torq Hyperautomation transforms your SOC with intelligent, AI-driven workflows that detect, investigate, and neutralize threats in real time, before damage is done.

Ready to see how Torq can revolutionize your cybersecurity operations with advanced automation and AI-driven security response?