
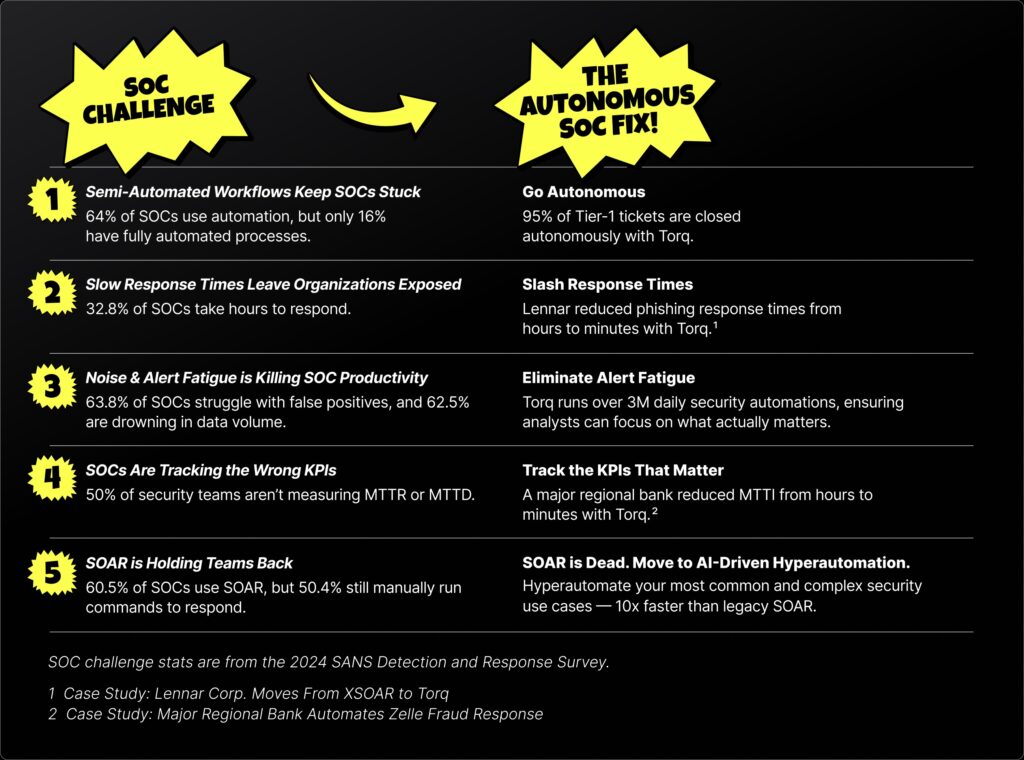

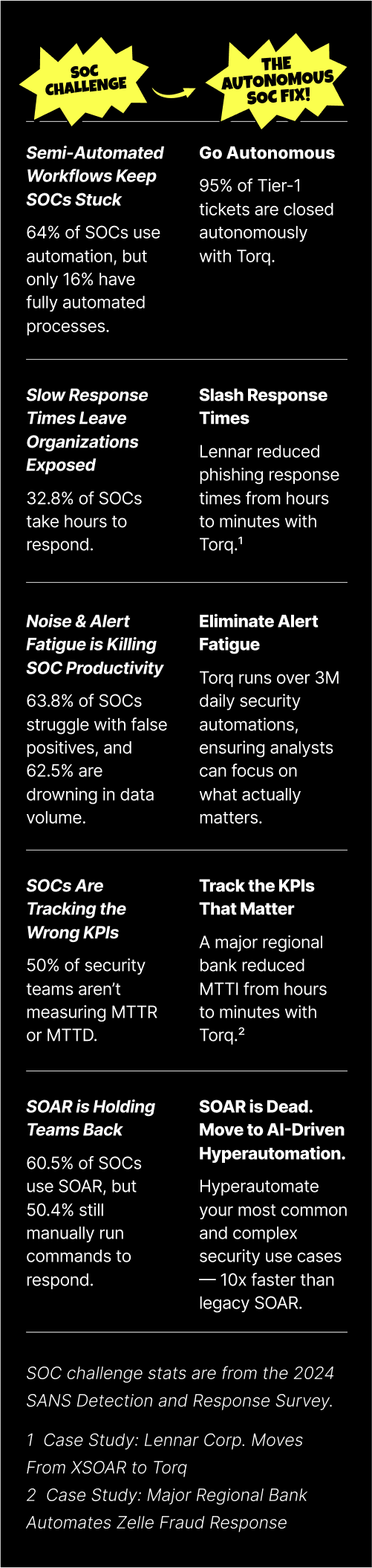

In security operations centers, the sheer volume of alerts can be overwhelming, so sorting out the chaos starts with knowing what you’re looking at. Quickly distinguishing a routine cybersecurity event from a genuine cybersecurity incident isn’t merely a matter of semantics; it’s fundamental to effective defense.

This blog breaks down the most prevalent types of cybersecurity attacks and how they continue to evade traditional defenses. More importantly, it shows how modern SOCs are automating their defenses with Torq Hyperautomation™ — using AI-powered detection, investigation, and incident response to turn security incident categories into action.

What is a Security Incident? (And Why Categorization Matters)

A security incident is an adverse event that compromises the confidentiality, integrity, or availability of a system and can negatively impact an organization. It can signify a breach of security policy, a violation of acceptable use, or a deviation from standard cybersecurity practices that has the potential to harm organizational assets or operations.

Security Incident vs. Security Event

Not every security event is an incident. A security event is any observable occurrence or change within a system or network, such as a login attempt or a file access. A security incident, however, is an event whose negative consequences are actually occurring or have already occurred.

Fifty phishing emails landing in user inboxes? That’s an event. A user replying to a phishing email to share confidential information? Now it’s an incident.

Examples of Security Incidents

- A user clicks on a malicious link in a phishing email

- Malware installed through a fake browser update

- An employee accidentally emails a sensitive file to the wrong recipient

- A brute-force attack against user login portals

- Unusual traffic spikes that turn out to be a DDoS attack

What are the Two Types of Security Incidents?

Cybersecurity incidents generally fall into two camps:

- Intentional: Think malware infections, privilege abuse, social engineering attacks, or targeted phishing attacks

- Accidental: Misconfigurations, user error, or lost devices (still very much incidents!)

What is an Information Security Incident?

An information security incident focuses specifically on threats to data, such as unauthorized access, exposure, modification, or deletion of sensitive information. If your intellectual property, customer data, or credentials are at risk, it’s in this bucket.

Why Categorizing Incidents is Critical for Consistent Response and Automation

Widely recognized references establish a common operational language for incident definition and classification. NIST (National Institute of Standards and Technology) publishes incident handling guidance such as SP 800-61, and MITRE ATT&CK catalogs adversary tactics and techniques that teams use to label what an attacker is doing. Other established frameworks, such as ISO/IEC 27035, SANS cybersecurity incident categories, and ENISA guidelines, shape how organizations define and structure types of security incidents, particularly within regulated or global environments.

Categorizing incidents helps cybersecurity teams:

- Rapidly understand a threat’s nature and potential impact

- Triage faster with more context

- Ensure consistent and predictable incident response

- Lay the groundwork for incident response automation

The true power of incident categorization emerges when it informs and enables automated incident response. In the Torq platform, categorization directly feeds into the design and execution of security workflows and dictates escalation paths.

For example, when an alert is categorized as “malware,” an automated response workflow can be instantly triggered. This workflow might automatically isolate the compromised host and dispatch a contextual alert to the SOC team. This systematic approach substantially reduces alert fatigue, allowing security analysts to concentrate on complex, high-priority investigations rather than wading through noise. The result is significantly faster and more consistent response.
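The routing idea in the malware example can be sketched in a few lines of Python. This is a minimal illustration, not Torq's API: the `Alert` fields, the step functions, and the `PLAYBOOKS` table are all hypothetical names, and the steps only record what a real integration would do.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Alert:
    """A hypothetical alert record; field names are illustrative."""
    alert_id: str
    category: str
    host: str
    actions_taken: List[str] = field(default_factory=list)


def isolate_host(alert: Alert) -> None:
    # Placeholder for a call to an EDR or network-control integration.
    alert.actions_taken.append(f"isolate:{alert.host}")


def notify_soc(alert: Alert) -> None:
    # Placeholder for sending a contextual message to the SOC team.
    alert.actions_taken.append(f"notify_soc:{alert.alert_id}")


def queue_for_triage(alert: Alert) -> None:
    # Fallback used when no playbook is registered for the category.
    alert.actions_taken.append(f"manual_triage:{alert.alert_id}")


# Each category maps to an ordered list of response steps (assumed mapping).
PLAYBOOKS: Dict[str, List[Callable[[Alert], None]]] = {
    "malware": [isolate_host, notify_soc],
}


def run_playbook(alert: Alert) -> List[str]:
    """Run every step registered for the alert's category, in order."""
    steps = PLAYBOOKS.get(alert.category.lower(), [queue_for_triage])
    for step in steps:
        step(alert)
    return alert.actions_taken
```

Calling `run_playbook(Alert("A-1", "malware", "fin-laptop-07"))` returns `['isolate:fin-laptop-07', 'notify_soc:A-1']`, while an alert with an unregistered category falls through to manual triage. Keeping the mapping in a table means a new category only needs a new entry, not new control flow.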

The 6 Most Common Security Incident Categories In Enterprise Environments — and How to Automate Them

Let’s break down the usual suspects. Here are the security incident categories you’ll encounter most in enterprise settings and how Torq Hyperautomation speeds up response.

1. Malware and Ransomware

Malware refers to malicious software designed to disrupt, damage, or gain unauthorized access to computer systems. Ransomware, a specific and highly disruptive type of malware attack, encrypts data and demands payment to get it back.

- Example: A finance department user downloads an Excel file disguised as an invoice. Boom. The entire shared drive gets encrypted, accompanied by a ransom demand. This scenario can rapidly halt critical business operations.

- Torq in Action: When malware attacks strike, Torq kicks in immediately — isolating infected endpoints, correlating alerts across systems, triggering EDR scans, and notifying the SOC automatically.

2. Phishing and Social Engineering

Social engineering uses psychological manipulation to trick users into taking risky actions or disclosing confidential data. Phishing is a type of social engineering that involves deceptive tactics, typically through emails or fraudulent websites, to dupe users into divulging sensitive information.

- Example: An executive receives a highly convincing email seemingly from their bank, requesting immediate account verification. Clicking the embedded link directs them to a spoofed website, where their login credentials are harvested and then used for unauthorized financial transactions.

- Torq in Action: Torq integrates with leading secure email gateways to flag risky messages, strip malicious links, and remove phishing attempts before users click. Confirmed phishing attacks trigger user notifications and IOC enrichment instantly.

3. Unauthorized Access and Privilege Misuse

Unauthorized access occurs when an individual gains entry to systems or networks without permission. Privilege misuse describes a situation where a user with legitimate access privileges abuses those permissions to access or exfiltrate data outside the scope of their authorized duties.

- Example: A recently departed employee’s account remains active. Days later, sensitive project documentation is downloaded using that account.

- Torq in Action: Torq automatically revokes stale credentials, triggers re-authentication flows, and alerts identity teams when suspicious access patterns emerge.

4. Insider Threats (Accidental and Malicious)

Insider threats originate from within an organization. They can be accidental, such as an employee inadvertently misconfiguring a critical server, or malicious, where an employee deliberately seeks to harm the organization, perhaps through intellectual property theft or system sabotage.

- Example (accidental): An employee uploads sensitive information like customer data to a personal Google Drive for convenience.

- Example (malicious): A disgruntled contractor copies or steals intellectual property before offboarding.

- Torq in Action: Torq monitors user behavior across endpoints, identity platforms, and cloud systems to detect anomalies. Unusual activity like bulk file downloads, strange login times, or privilege escalation? Torq flags it and launches workflows to lock accounts, revoke access, and notify HR and legal — automatically.

5. Denial-of-Service (DoS/DDoS)

A Denial-of-Service (DoS) attack aims to render a service unavailable by overwhelming it with excessive traffic or requests, thereby preventing legitimate users from accessing it. A Distributed Denial-of-Service (DDoS) attack achieves the same objective but utilizes multiple compromised systems, making mitigation significantly more challenging.

- Example: A botnet sends millions of requests to your login page, knocking it offline.

- Torq in Action: Torq integrates with tools like Cloudflare and Akamai to detect traffic anomalies, block bad IPs, and notify your team.

6. Data Breaches and Exfiltration

A data breach is unauthorized access to sensitive, protected, or confidential data. Data exfiltration is the unauthorized transfer of data from a system or network to an external destination. (A data breach usually comes before exfiltration.)

- Example: A healthcare provider discovers that protected health information (PHI), including patient identities and medical histories, has been accessed and copied by an attacker.

- Torq in Action: Torq listens for signs of exfiltration — like abnormal API calls or outbound traffic spikes — then correlates them across systems. If the signals stack up, it kicks off investigation, isolates affected systems, and accelerates breach response.

How Subcategories and Signals Drive Better Detection

Security incident subcategories enable much finer detection capabilities and facilitate highly targeted responses. Think of a crime scene investigation: rather than simply labeling an event as a “burglary,” categorizing it as “forced entry during specific hours” provides far greater context and detail.

Cybersecurity Incident Subcategories That Add Granularity

Some common sub-types of security incidents include:

- Business Email Compromise (BEC): This is a subcategory of social engineering where an attacker impersonates a senior executive to deceive an employee into conducting unauthorized financial transfers or disclosing sensitive data. (You know the one: “Hey, it’s your CEO. Quick favor: I’m stuck in a board meeting and really need five $200 Apple gift cards for a client thing. Text me the codes ASAP, thanks! 🙏”)

- Keylogger: This subcategory of malware is designed to record user keystrokes, potentially capturing credentials and confidential information.

- Credential Stuffing: This is a subcategory of account compromise in which stolen username/password pairs (often sourced from unrelated data breaches) are used to gain unauthorized access to user accounts.
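One way to carry this granularity through a pipeline is to store each subcategory alongside its parent category, so coarse routing and fine-grained detection can share one label. The sketch below is illustrative only: the key names and the `resolve` helper are assumptions, and the parent mappings simply mirror the three examples above.

```python
from typing import Dict, Optional, Tuple

# Subcategory -> parent category, taken from the three examples above.
SUBCATEGORY_PARENT: Dict[str, str] = {
    "business_email_compromise": "social_engineering",
    "keylogger": "malware",
    "credential_stuffing": "account_compromise",
}


def resolve(label: str) -> Tuple[str, Optional[str]]:
    """Return (category, subcategory) for a free-form label.

    A label that names a known subcategory is expanded to its parent;
    anything else is treated as a top-level category with no subcategory.
    """
    key = label.strip().lower().replace(" ", "_")
    if key in SUBCATEGORY_PARENT:
        return SUBCATEGORY_PARENT[key], key
    return key, None
```

For example, `resolve("Credential Stuffing")` returns `("account_compromise", "credential_stuffing")`, while `resolve("malware")` returns `("malware", None)`.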

Detection Cues: Precursors vs. Indicators

Two types of signals help with detecting cybersecurity incidents.

- Precursors = Early warning signals: These signals suggest an attack may be imminent, offering an opportunity for proactive intervention or prevention. For example, a sudden surge in failed login attempts against a critical system, or an unusual volume of emails containing suspicious attachments.

- Indicators = Evidence of compromise: These represent direct evidence that a cybersecurity incident has occurred or is actively underway. For example, unauthorized file modifications on a critical server or a dormant account suddenly logging in from an atypical geographic location and exfiltrating substantial data volumes.
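The distinction matters operationally because the two signal types call for different next steps. A minimal sketch, assuming hypothetical signal names drawn from the examples above and an invented three-way hint (`respond`, `harden`, `monitor`):

```python
from enum import Enum
from typing import Dict, Iterable


class SignalType(Enum):
    PRECURSOR = "precursor"   # an attack may be imminent
    INDICATOR = "indicator"   # an incident has occurred or is underway


# Hypothetical signal names, classified per the examples in the text.
SIGNAL_TYPES: Dict[str, SignalType] = {
    "failed_login_surge": SignalType.PRECURSOR,
    "suspicious_attachment_volume": SignalType.PRECURSOR,
    "unauthorized_file_modification": SignalType.INDICATOR,
    "dormant_account_exfiltration": SignalType.INDICATOR,
}


def triage_hint(signals: Iterable[str]) -> str:
    """Suggest a next step from the strongest signal type observed."""
    seen = {SIGNAL_TYPES[s] for s in signals if s in SIGNAL_TYPES}
    if SignalType.INDICATOR in seen:
        return "respond"   # evidence of compromise: start incident response
    if SignalType.PRECURSOR in seen:
        return "harden"    # early warning: intervene before the attack lands
    return "monitor"       # nothing recognized: keep watching
```

Any indicator outranks any number of precursors here, which is a deliberate simplification; a production system would likely weigh signals by confidence and asset criticality as well.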

Security Incident Categorization Best Practices

To categorize security incidents effectively, organizations should adopt a standardized framework such as the NIST Cybersecurity Framework. Regular training that keeps security personnel familiar with the latest threats and incident types is also crucial.

Additionally, utilizing Torq Hyperautomation™ can streamline the categorization process, allowing teams to focus their efforts on high-priority incidents.

Common Challenges in Security Incident Categorization

Despite having a robust categorization framework in place, organizations often encounter security incident categorization challenges such as a lack of real-time visibility into incidents or inadequate data for analysis. This is where Torq Hyperautomation™ shines, providing immediate insights and automating responses based on categorized incidents.

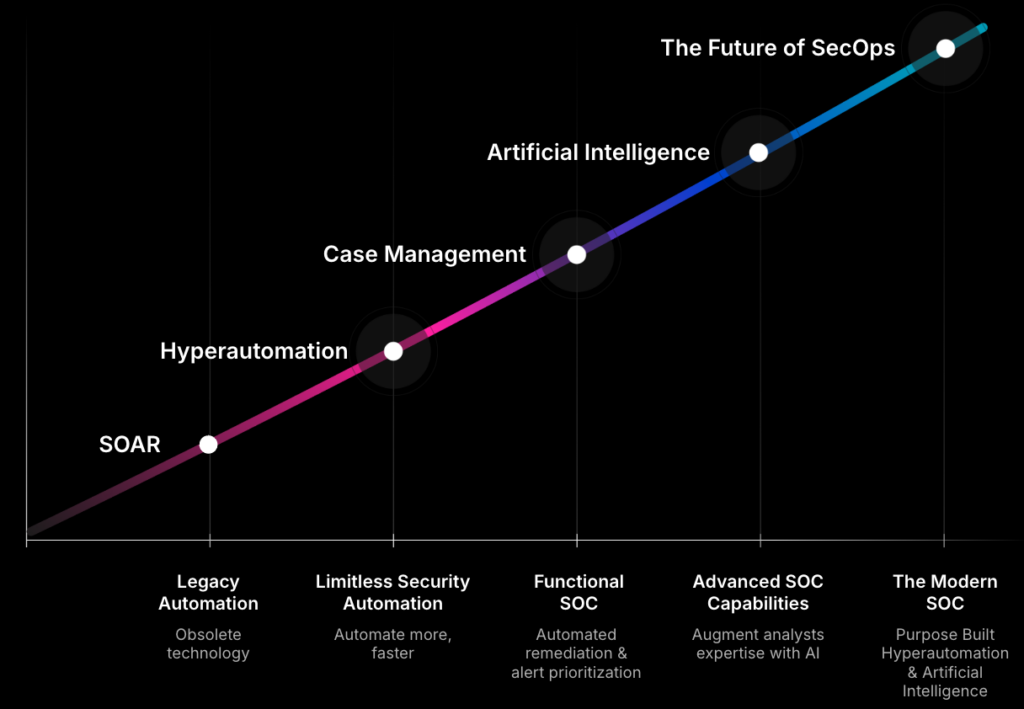

Categorization Is the First Step Toward Autonomous SOCs

Effective security incident classification isn’t merely a procedural step. Security incident categories enable intelligent triage, which fuels automation and accelerates response — all critical for building scalable, autonomous SOCs that can handle modern threat volume and complexity.

Learn how your SOC can move from reactive alert clickers to a strategic value center in the Don’t Die Manifesto.

FAQs

Categorizing security incidents speeds up everything — from understanding threats to triggering the right response. Clear categories help teams triage smarter, tailor responses, prioritize efforts, and allocate resources effectively. Additionally, accurate categorization lays the groundwork for automation, enabling organizations to implement specific automated responses that minimize reaction time and lessen the impact of cyber threats.

Torq Hyperautomation streamlines incident detection and identification and rapidly correlates security incidents with historical data, so your team doesn’t have to start from scratch every time an alert fires.

Absolutely. New threats = new security incident categories. That’s why it’s important to keep your categorization framework current as the risk landscape changes. Staying agile in your response strategy ensures that your incident management system remains relevant and effective.

Well-trained employees are your first line of cybersecurity defense — especially against cybersecurity incidents like phishing and accidental leaks. Educating employees about common security threats and ensuring they understand how to recognize and report potential security events not only decreases security incident occurrence but also speeds up incident response and fosters a culture of security awareness.

Track metrics like response times (MTTR), accuracy of tagging, and resolution rates. If things are moving faster across the board, you’re on the right track.
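As a rough illustration, two of these metrics can be computed from a list of incident timestamps. The sketch assumes each incident is a `(detected_at, resolved_at)` pair with `None` for unresolved incidents, and it measures MTTR from detection to resolution; both the record shape and that definition are assumptions, since teams define MTTR differently.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# (detected_at, resolved_at); resolved_at is None while the incident is open.
Incident = Tuple[datetime, Optional[datetime]]


def mean_time_to_respond(incidents: List[Incident]) -> Optional[timedelta]:
    """Average detection-to-resolution time over resolved incidents."""
    durations = [
        resolved - detected
        for detected, resolved in incidents
        if resolved is not None
    ]
    if not durations:
        return None  # nothing resolved yet, so the mean is undefined
    return sum(durations, timedelta()) / len(durations)


def resolution_rate(incidents: List[Incident]) -> float:
    """Fraction of incidents that have been resolved."""
    if not incidents:
        return 0.0
    resolved = sum(1 for _, r in incidents if r is not None)
    return resolved / len(incidents)
```

Comparing these numbers per category, before and after a categorization change, shows whether the change actually sped things up.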

Organizations should adopt a proactive approach that includes regular threat assessments and engaging with cybersecurity communities to stay informed on emerging threats. Keep tools current, threat intel flowing, and security incident response playbooks updated with what you’re learning.

It comes down to impact. Look at the number of compromised systems, the sensitivity of the data involved, legal implications, and business disruption. Use a risk framework to guide the incident response plan based on the severity of the security breach.
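A simple additive score is one way to turn those four factors into a severity label. Everything numeric below is an assumption made for illustration: the 0 to 3 scales, the cap at ten systems, the weight given to legal exposure, and the thresholds should all come from your own risk framework.

```python
from dataclasses import dataclass


@dataclass
class Impact:
    compromised_systems: int
    data_sensitivity: int      # hypothetical scale: 0 (public) to 3 (regulated)
    legal_exposure: bool       # True if legal or regulatory duties are triggered
    business_disruption: int   # hypothetical scale: 0 (none) to 3 (critical outage)


def severity(impact: Impact) -> str:
    """Map impact factors to a severity label using assumed weights."""
    # Scale system count to 0-3, capping at 10 systems.
    score = min(impact.compromised_systems, 10) / 10 * 3
    score += impact.data_sensitivity
    score += 2 if impact.legal_exposure else 0
    score += impact.business_disruption
    # The maximum possible score is 11.
    if score >= 8:
        return "critical"
    if score >= 5:
        return "high"
    if score >= 2:
        return "medium"
    return "low"
```

For instance, `severity(Impact(5, 2, True, 1))` scores 6.5 and returns `"high"`, while a single low-sensitivity host with no disruption stays `"low"`.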

SIEMs, EDRs, and threat intel platforms all help with security incident categorization — especially when integrated with something like Torq to drive automated incident response.

Log the what, when, who, and how of the cyber incident. Torq makes it easy with AI-generated case summaries to help teams analyze trends and sharpen response over time.

Smarter AI, adaptive frameworks, and real-time categorization that evolves as threats change. Torq’s already building toward that future.